Video for this episode of the series

About the author

At Gappex, Tomáš Rolc develops the business side of the SmartFP platform, which helps companies digitise processes using a low-code approach. He strives to connect technical knowledge with business and focuses on making SmartFP accessible and understandable to as many users as possible.

Legal and ethical notices for AI videos and audio

Let’s take a look together at how AI is making video and audio easier to work with

The purpose of this episode is to show how artificial intelligence can help you create video content and audio recordings. From generating footage and animations to narrating commentary or dubbing, AI can speed up creative activities in marketing. You don’t have to be afraid to experiment; you just need to know what to expect from it and where its limits are.

As in the previous episodes, the best results come from a combination of AI and humans. AI can generate a video or voice track in a flash based on instructions, but it needs your supervision and creative guidance. In practice, it works best when a human sets the script and AI helps with execution – designing visuals, editing footage, providing voiceover. AI won’t do the work for you entirely on its own, but when used properly, it allows you to be significantly more efficient – creating content faster, trying different variations easily, and focusing more on ideas than technical details. It’s like having the entire production on one computer, but you’re still the director.

Example of using AI to work with video and audio 1/5:

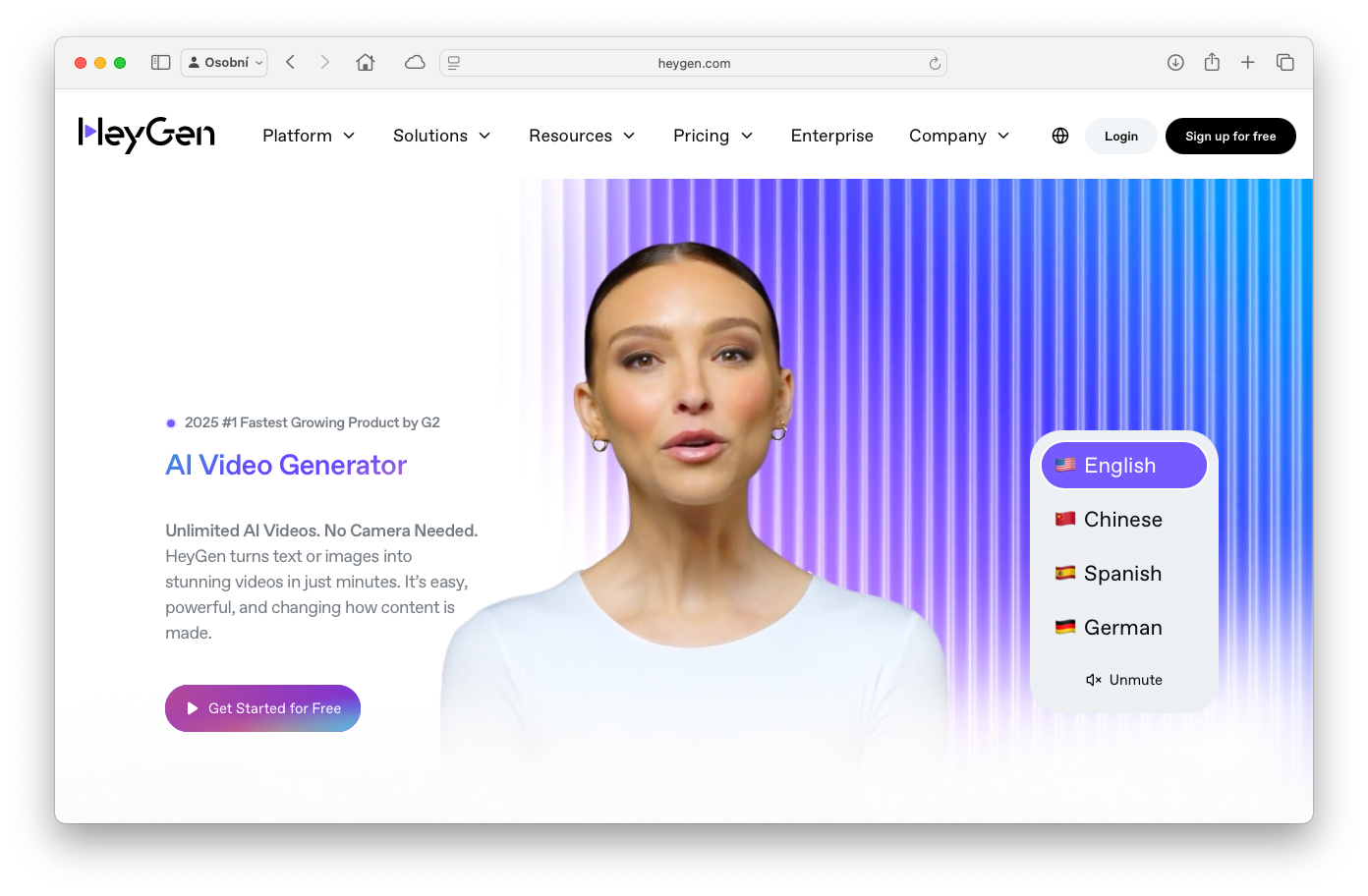

HeyGen: video from text using AI avatars

Do you spend hours making videos because you need to present something clearly? Or do you simply not like to see yourself on camera? The solution is an AI avatar – a virtual character that says the prepared text for you. HeyGen is an online tool that lets you create such a video in minutes. Just write a script, choose an avatar (digital “actor”) and set the voice – HeyGen then generates a video clip where your chosen avatar speaks your text with faithful facial expressions and gestures.

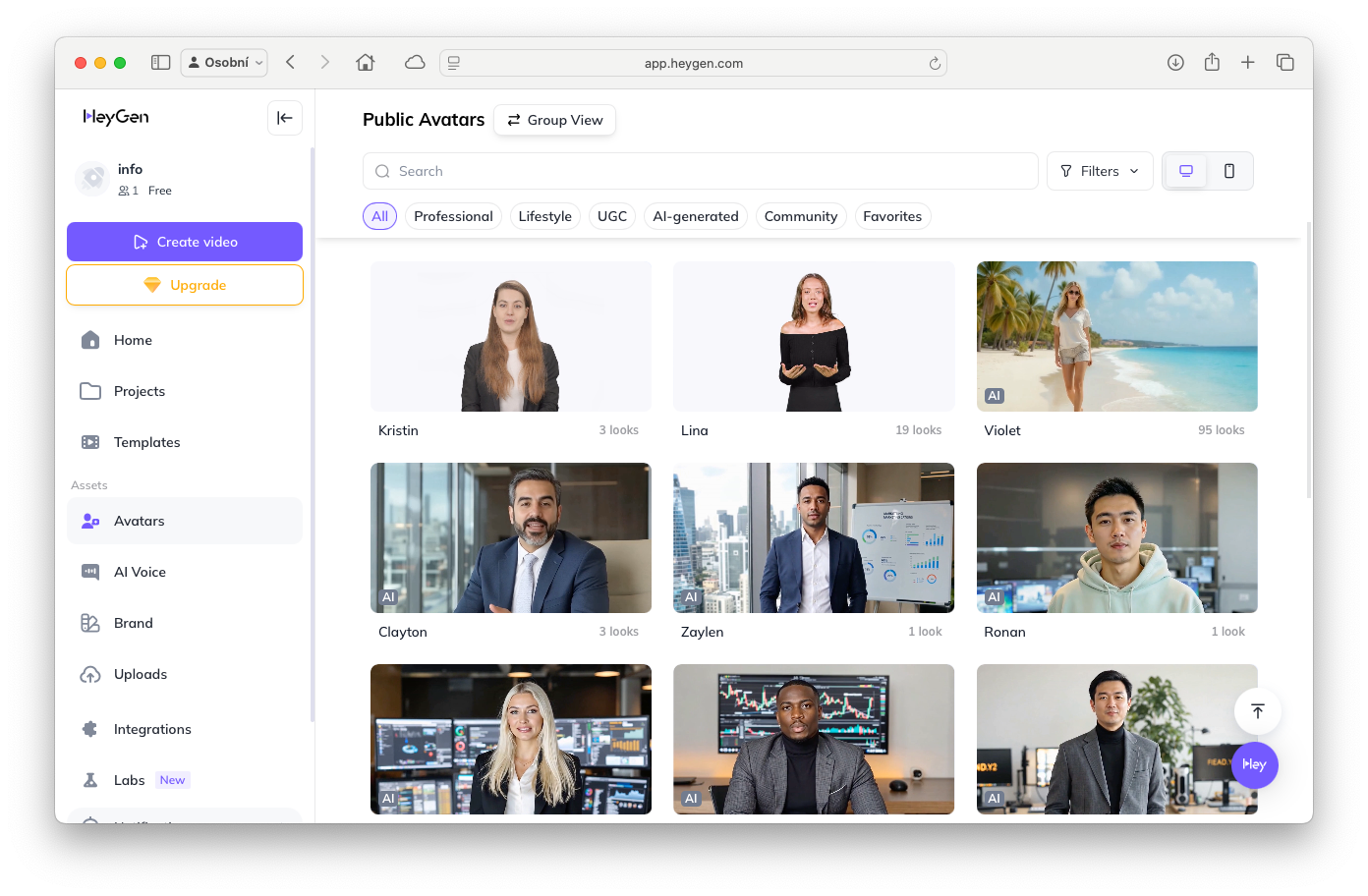

HeyGen offers dozens of pre-made avatars of different looks, genders and styles. You can choose a businesswoman in a suit, a lecturer in a casual T-shirt or a young influencer, depending on what fits the content of the video. More than 30 languages are supported, including Czech, so your avatar can speak Czech (with only a minimal accent). Just type the text in Czech and select a Czech voice from the menu. HeyGen has hundreds of different voices to choose from – male and female, formal and friendly, with different accents. So you can easily adjust the tone of speech to suit your needs.

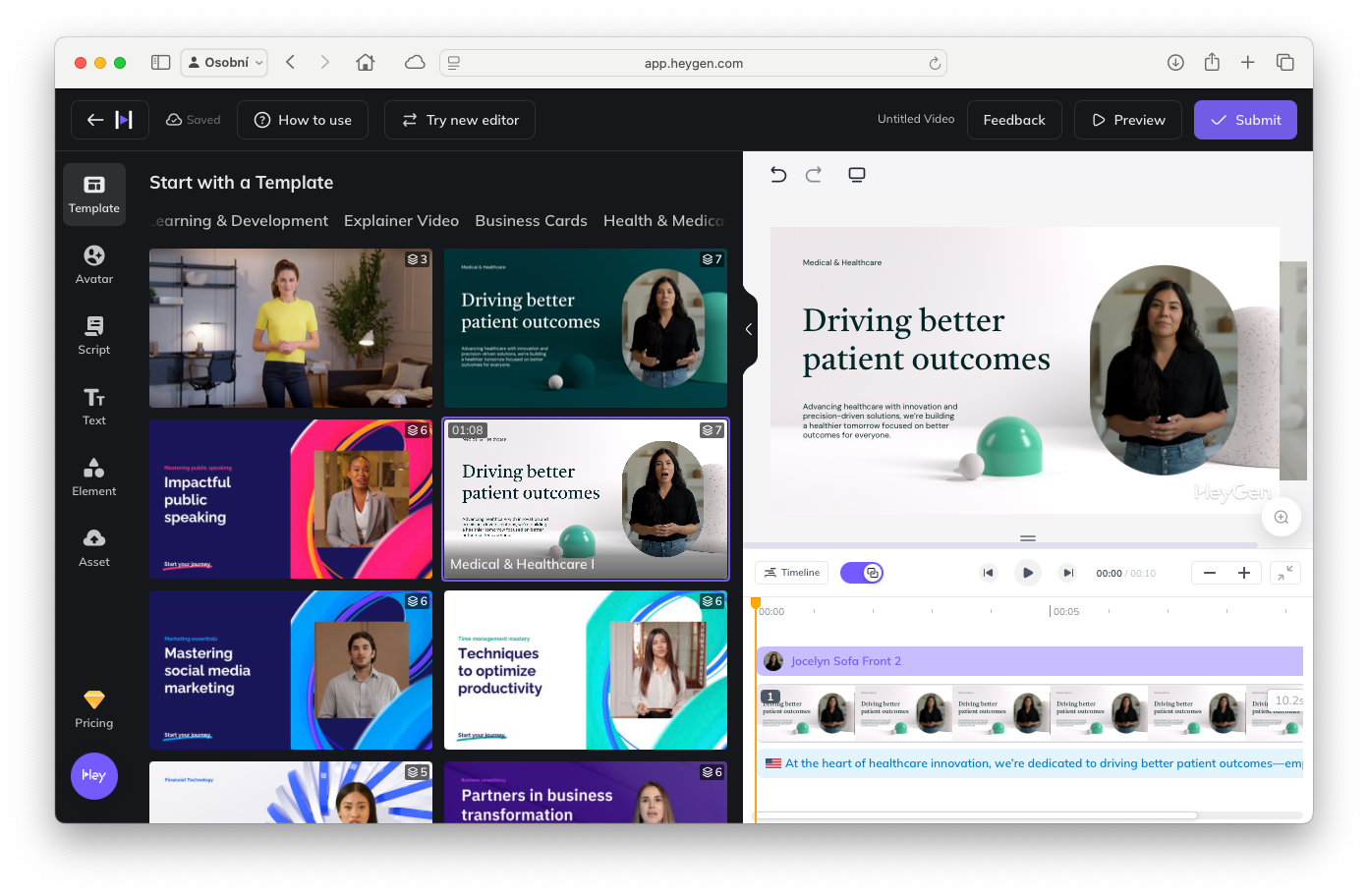

The video creation process itself is very simple: after registering on HeyGen, you choose a video template (or start with a blank canvas), insert text, choose an avatar and voice, and start generating with one click. In a few minutes, you’ll see the finished video, which you can play and download. On the screen, you see a preview of the avatar and next to it a text editor for the script – so editing is quick (see image below). If you don’t like the result, just change the text or other settings and let the AI re-generate the video.

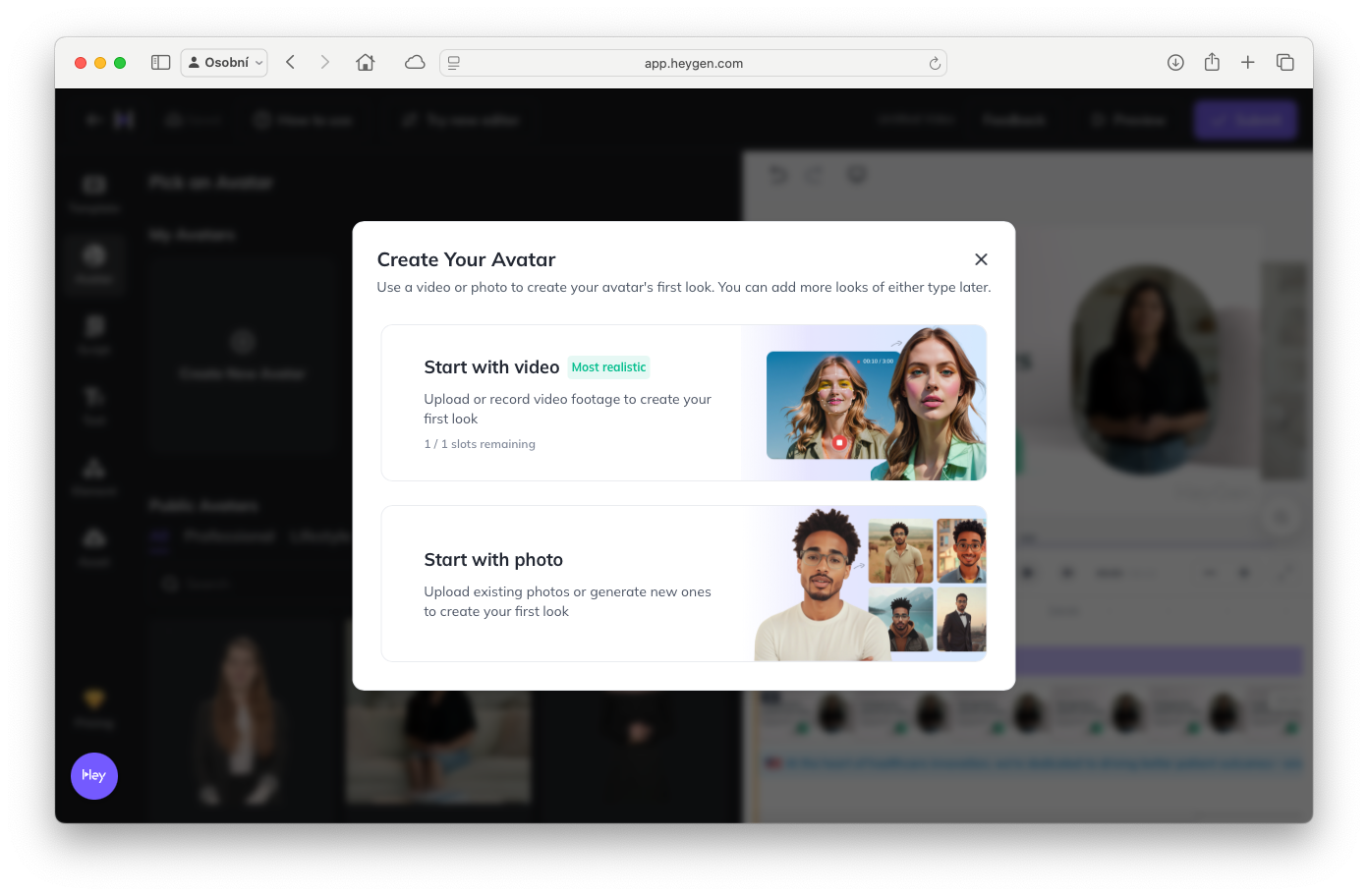

What if you want something more distinctive than pre-made characters? HeyGen also allows you to create your own avatar that looks (and sounds) like yourself, or perhaps a colleague, company mascot, etc. There are two main methods: either upload a short video of the person speaking (about 2 minutes of footage), or upload a set of photos of the face (10-15 shots from different angles are recommended). The AI will use this footage to create a digital copy – an avatar that mimics the look, facial expressions and to some extent the voice of the original.

This feature must first be activated in the HeyGen settings (Custom Avatar section), and you should expect that creating a custom avatar may take a few hours. Once it’s ready, it will appear in your library and you can use it just like any other: give it a text and your “digital self” will speak it. HeyGen has published a clear step-by-step guide to creating your own avatar. I recommend checking out their help section; we also show the process in our video.

The AI avatar from HeyGen is suitable for explainer videos, product presentations, e-learning or web welcome videos – in short, anywhere you want to say something in person but can’t or don’t want to be physically in front of the camera. The resulting videos are surprisingly believable. Of course, the observant viewer can tell that it’s animation (the movements can be a bit jerky at times), but for everyday use the quality is more than sufficient. For a fraction of the time and cost, you can create content that would otherwise require a camera studio, actors, or your own filming.

Take a look at how we described one of the functionalities of our SmartFP platform using HeyGen:

Tip: Many users also use HeyGen to localize videos – they shoot a video once (in English, for example) and then have the avatar speak it in other languages. This way they don’t have to reshoot, they just swap the voice and subtitles. The AI will take care of lip-syncing the avatar to the new language. So you can easily “translate” the video into Czech, Slovak, English and German, for example – and the same character will speak everywhere, just in a different language.

Example of using AI to work with video and audio 2/5:

Sora: video generation and editing by OpenAI

Now we’ll move from talking avatars to more general video creation. Imagine you could write any scenario as text and the AI would make a video based on it. That’s exactly what OpenAI’s new product called Sora promises. It’s an advanced tool for generating video from text, an image or a short video clip. Sora can create video clips of up to 20 seconds based on a text description – for example, you describe a scene “sunset over the ocean, camera slowly rising up” and the AI generates a moving shot matching the description.

But Sora does much more than just text → video. It features a full video editor with artificial intelligence. Key features include:

- Remix video: You upload your own short video and describe in text what you want to change in it. For example, you can write “change the daytime scene to a night scene with rain” and the AI will make the appropriate adjustments to the video.

- Storyboard (timeline): you can combine multiple generated scenes. Sora has an editor where you can lay out a sequence of clips in a timeline – for example, first landscape shot, second close-up on a character, third action move. The editor lets you adjust how the scenes build on each other, and keep a consistent style across the entire video.

- Loop: With a single button, Sora can edit the video so that the last frame flows seamlessly into the first – creating a perfectly smooth loop. This is useful for animated web backgrounds or endless GIFs, for example.

- Blend: Sora can take two different video clips and combine them into one – intelligently creating a transition between them. This allows you to blend different scenes or styles to create interesting effects (e.g. a jellyfish floating through the treetops).

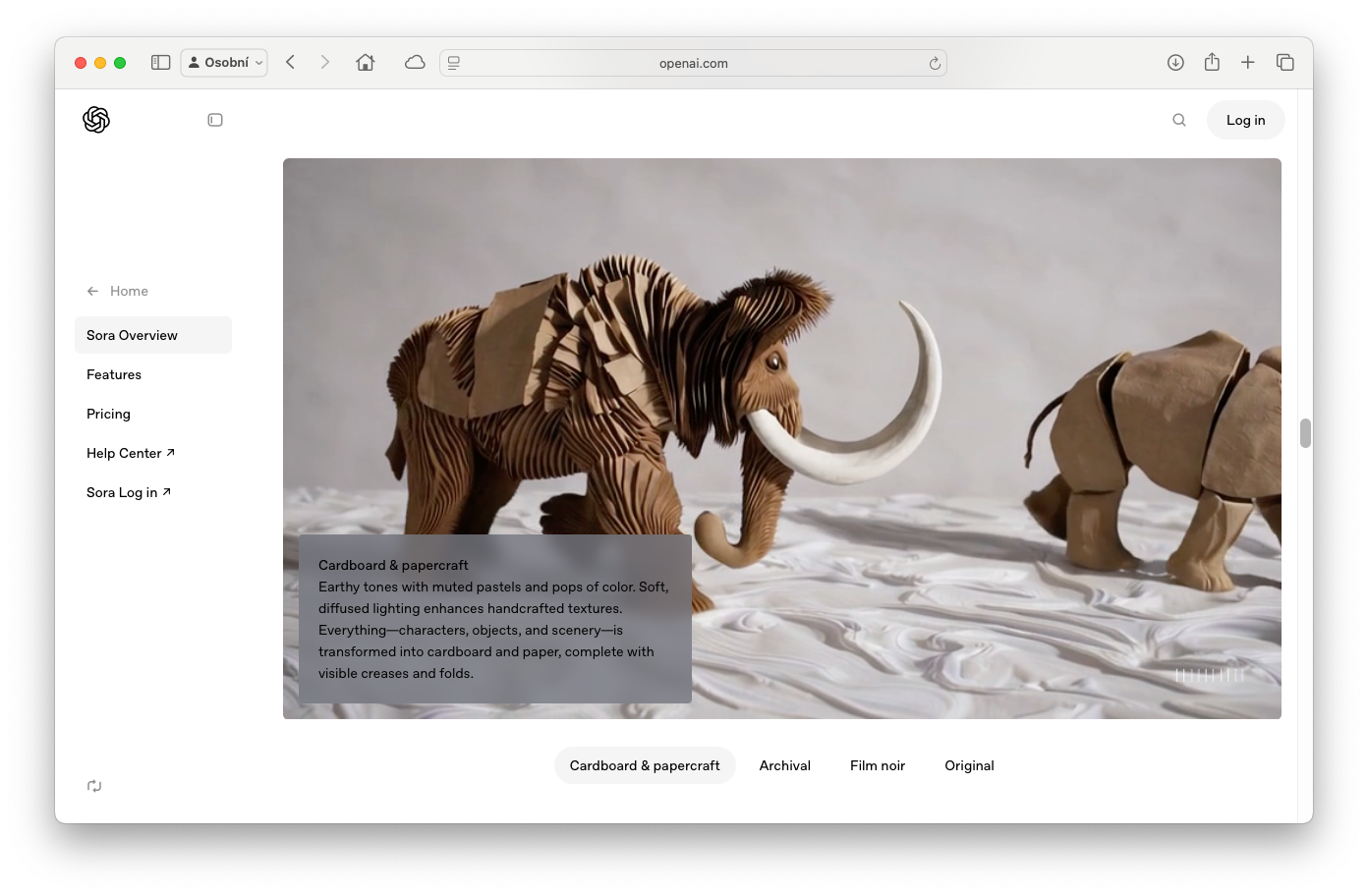

- Presets: Preset styles are available to give your video a consistent look. E.g. “film noir” (black and white with high contrast), “paper cutout” (everything looks like cardboard), “retro archive”, etc. You can let the AI generate a video in a specific aesthetic without having to tweak every detail.

Sora is simply a small AI film studio. 🙂 In practice, it has to be said that the outputs are still limited in length (max 10 seconds for normal users, 20 seconds for higher plans) and generating more complex scenes can be challenging (the AI sometimes struggles with details like accurate faces or text in the image). But this is a huge shift – just a year ago, something like this was unimaginable. Today, you can “shoot” your own sci-fi footage or animate a static illustration with a few clicks.

How to try Sora? Sora is currently integrated into the ChatGPT web application. If you have a ChatGPT Plus subscription, you can also access the image and video features – just visit the Sora website and log in with the same details as ChatGPT.

Tip: You’ll appreciate Sora even if you don’t plan to make a whole film of your own. For example, it’s great for livening up presentations or websites. Instead of a static background, you can use Sora to create an impressive animation (like an abstract loop or nature motif). Social media marketing can also benefit from AI videos – you can produce short, engaging spots in no time. And if you enjoy experimenting, Sora is a toy for creatives – you can try unrealistic combinations (e.g. “pencil-drawn dinosaur playing guitar on the moon”) and be surprised by what the AI conjures up.

Example of using AI to work with video and audio 3/5:

SmartFP: planning and managing marketing campaigns

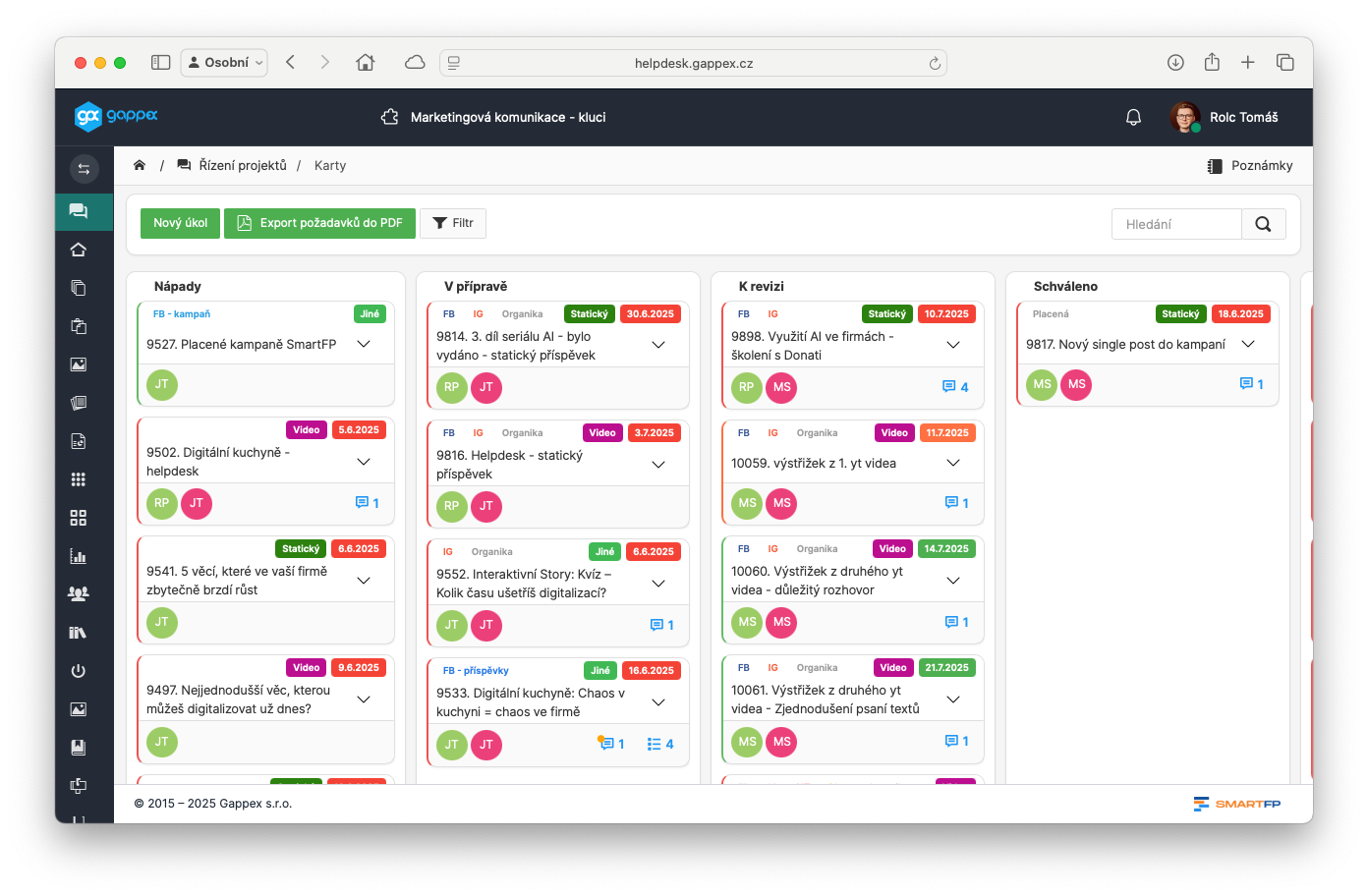

In the previous examples, we created content. In the next phase, a tool that is not generative AI per se can help you organize everything. What good are the best AI creations if you have nowhere to collect them neatly, plan their publication and ensure team collaboration? For this, the SmartFP platform has a Project Management module (FastTask). We will show how it can make it easier for the marketing team to plan and approve social media posts or manage campaigns in general.

The Project Management module is designed to facilitate effective planning, tracking and coordination of tasks within a team. For example, you can create a “Summer Marketing Campaign” project, add all sub-tasks to it (e.g. “Create trailer video”, “Write text for posts”, “Schedule Facebook posting”, “Approve visuals with management”, etc.) and assign them to colleagues with deadlines. You can see each task on a clear kanban board – which displays columns like To-Do, In Progress, Done, etc. You drag and drop tasks between columns based on what stage they’re in, giving everyone an instant overview of the campaign’s status.

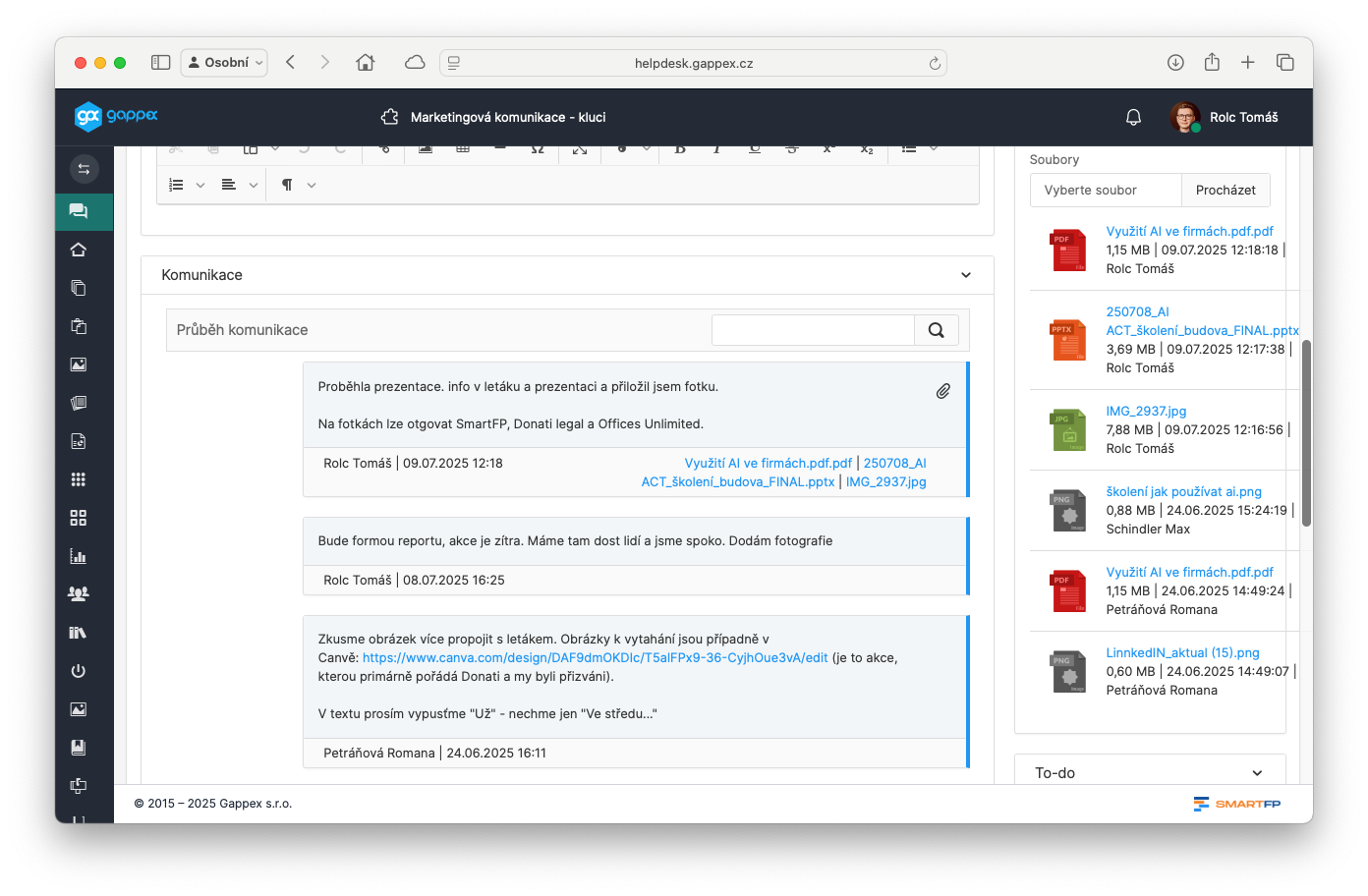

Communication within the team takes place directly in the tasks – each task has its own discussion, change history and the ability to attach files. For example, a graphic designer uploads a banner design to a task (like the AI-generated image in the previous episode 😊) and marks the task as pending approval. The person responsible gets a notification (via email or in the app) and can take a look, comment, or move the task forward. All changes (moving to another state, new comment, editing text…) are logged with a timestamp on the task timeline – so you can see who did what and when at any time. This transparency prevents things from getting lost in an email or forgotten.

A great feature of the FastTask module is the fast recording of ideas and requests (Windows, Outlook and Chrome required). For example, when you get an email from a client requesting marketing material, just drag and drop it into the SmartFP window – a new task is automatically created with the title and description pre-filled from that email. Similarly, you can insert a screenshot with one click: press Print Screen or Ctrl+V and the screenshot is attached to the task – great for visual reminders (“edit this part of the site here”, etc.).

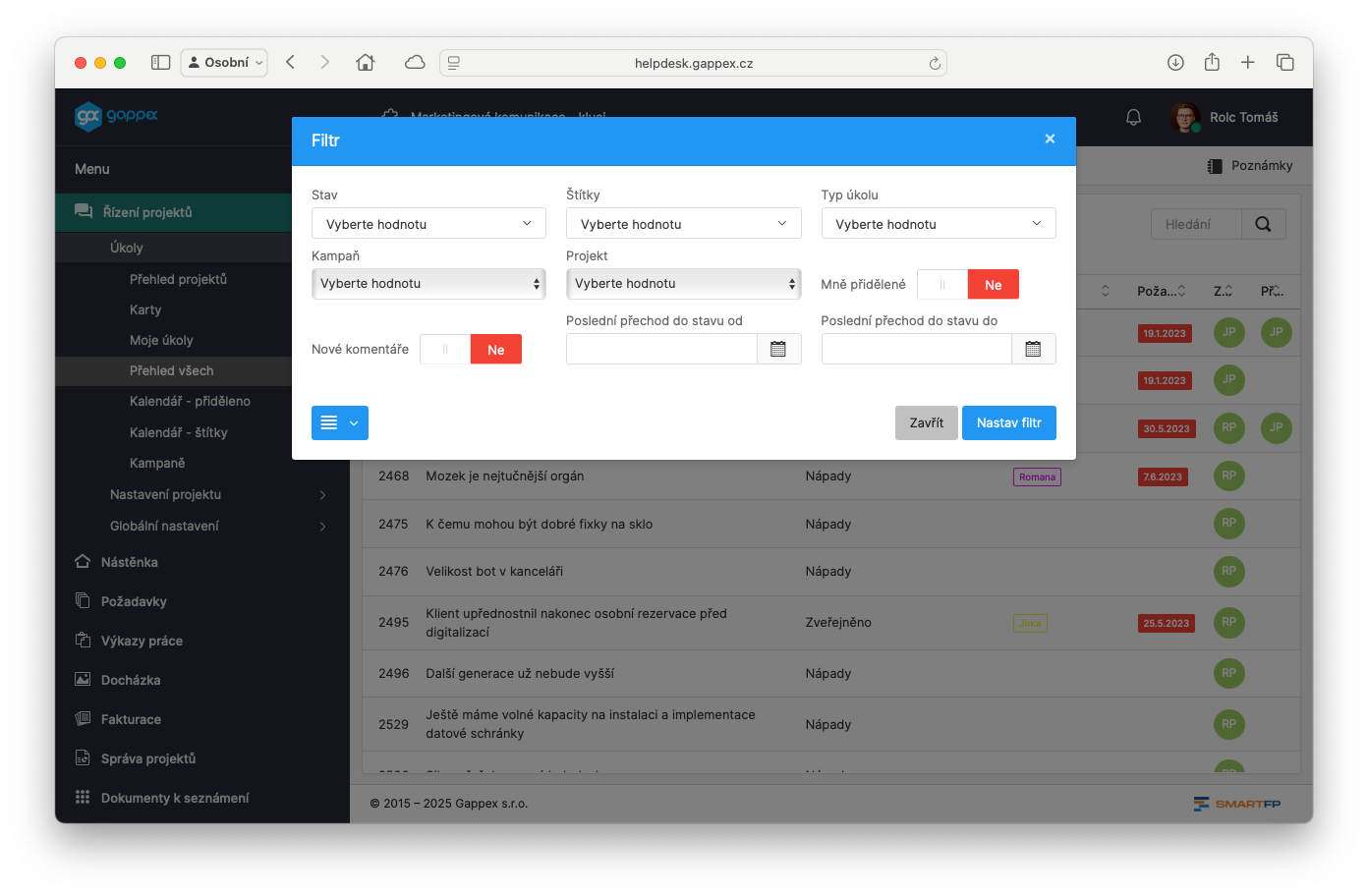

From a team leader’s perspective, the module also offers filtering and reports. You can view all tasks of one employee across projects, or conversely all tasks that have a deadline this week. This makes it easy to discover who’s overloaded, what’s on fire, and where there’s spare capacity. Approving deliverables is also important for marketing campaigns – in SmartFP, you simply give the task a “Pending Approval” status, and the person responsible either approves it (moves it to Done) or returns it to In Progress with a comment. This way you are in control of the whole process and nothing is published without approval.
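The approval loop described above can be pictured as a small state machine. The following is only an illustrative sketch, not SmartFP's actual implementation or API: the `Task` class, the `ALLOWED` table and the `can_publish` helper are hypothetical names, and the statuses simply mirror the kanban columns mentioned in this article.

```python
from dataclasses import dataclass, field

# Hypothetical transition table modelled on the columns named in the article.
# This is NOT SmartFP's API, just a way to picture the approval rule.
ALLOWED = {
    "To-Do": {"In Progress"},
    "In Progress": {"Pending Approval"},
    "Pending Approval": {"Done", "In Progress"},  # approve, or return with a comment
    "Done": set(),
}


@dataclass
class Task:
    title: str
    status: str = "To-Do"
    history: list = field(default_factory=list)

    def move(self, new_status: str, who: str, comment: str = "") -> None:
        """Move the task to a new status and log who did it."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status} -> {new_status} is not allowed")
        self.history.append((who, self.status, new_status, comment))
        self.status = new_status


def can_publish(task: Task) -> bool:
    """Only approved (Done) tasks may be published."""
    return task.status == "Done"


# Example run: the manager returns the task once before approving it.
task = Task("Create trailer video")
task.move("In Progress", "designer")
task.move("Pending Approval", "designer")
task.move("In Progress", "manager", "Logo is too small")
task.move("Pending Approval", "designer")
task.move("Done", "manager")
```

Because a task can only reach Done by passing through Pending Approval, nothing skips the reviewer, and the `history` list plays the role of the task timeline.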

The Project Management module is versatile: it can be used not only by marketers, but also by development teams, sales departments for bid management, HR for recruitment, etc. It is a flexible tool for any project management. If you’re interested in this module, we’d be happy to set up a demo and show you how it would work in your environment.

Tip: Think about where in your process you could use a project management tool and let us know.

Example of using AI to work with video and audio 4/5:

OpenArt: creating videos with a consistent character

When we talked about images in the last installment, we mentioned that the AI sometimes struggles with consistency – if you generate ten images of the “same” person, each one may look slightly different. This is an even bigger problem in video: the actor’s facial features would change from frame to frame! That’s why tools are being developed that focus on keeping the character consistent across multiple shots. One such tool is OpenArt – originally an AI image generation platform that now offers advanced models for video.

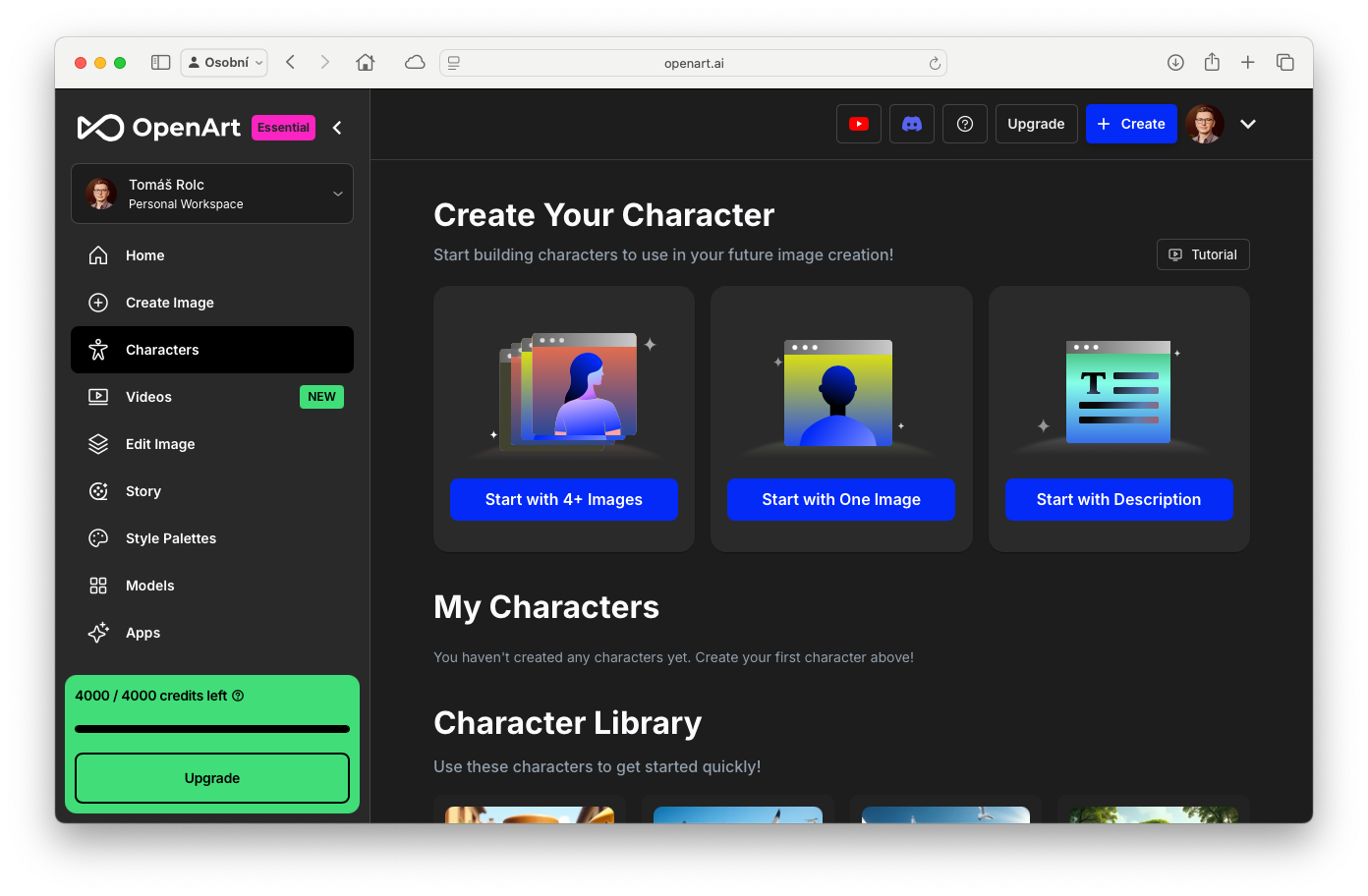

OpenArt has a feature called Characters, which allows you to create your own character and use it repeatedly. The process is that you upload one or more pictures of the character (it can be a real person – your selfie, for example, or even a fictional cartoon character) or simply describe verbally what it should look like. The AI will then generate a model of that character and you can give it a name. Then, whenever you create an image or video in OpenArt, just put a link to that specific character in the prompt and the AI will make sure the result matches its appearance.

Example: let’s say you create the character “Anna – a young brunette in a red dress”. You let OpenArt generate several images, choose the one you like best, and save Anna as your character. Now you want to create a comic story about Anna – so you need a series of images where Anna appears in a different situation each time, but still looks like Anna. With a regular generator, she would have a different nose one time, different eyes the next… With OpenArt Characters you say: “[Anna] is sitting in an office typing on a computer, comic book style”. The AI will generate a picture of Anna at the computer. Next scene: “[Anna] running on the beach at sunset” – you get Anna again, this time moving on the beach. And so on. The whole comic has a single protagonist.

This is great for brand characters, mascots, or maybe when you want to use the same AI “actor” in different visuals in marketing. You don’t have to tweak the prompt to “look alike” every time – OpenArt will take care of the feature matching automatically.
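If you plan a longer series of scenes, it can help to prepare the prompts in bulk so the character reference and the style stay identical in every one. Here is a minimal sketch that only builds text strings to paste into the generator. The helper name `scene_prompts` is made up for illustration, and the `[Anna]` bracket notation is taken from the example above, so check OpenArt's own instructions for the exact way to reference a character.

```python
def scene_prompts(character: str, scenes: list[str], style: str = "") -> list[str]:
    """Build one prompt per scene, always starting with the same character tag."""
    tag = f"[{character}]"
    suffix = f", {style}" if style else ""
    return [f"{tag} {scene}{suffix}" for scene in scenes]


# Example: two comic panels with the same protagonist and style.
prompts = scene_prompts(
    "Anna",
    [
        "is sitting in an office typing on a computer",
        "running on the beach at sunset",
    ],
    style="comic book style",
)
for prompt in prompts:
    print(prompt)
```

Keeping the scene descriptions in a list also makes it easy to reorder or rewrite individual panels without touching the rest.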

But OpenArt doesn’t stop at just static images. Thanks to built-in models (such as Kling 2.1 – one of the latest AI video models), you can also generate short videos. Crucially, the consistency of the character also applies over time – that is, within the video. If you use a defined character, the AI tries to keep it the same from the first to the last frame of the video. This solves the problem where older AI video models had “floating” faces (the character looked a little different in each frame, which the eye would recognize as unnatural). With OpenArt, this should happen less.

How to use OpenArt? Just register at openart.ai – basic image generation is free (you get a certain amount of credits per day). For more advanced features, longer videos or training your own characters, you need a paid plan or purchase credits, but you don’t need to pay anything to try it out.

Select Characters in the toolbox and create a test one. For example, you can upload one photo of yourself and let the AI train the character. Then try generating a simple image using that character (use the name or link to that character in the prompt – OpenArt’s instructions will show you). See how the AI renders the scene with you as the “actor”. It’s a bit sci-fi, but it works! 🙂 Once you’ve played around with images, switch to Video (like Sora, OpenArt supports generating video either purely from text or from an image). Type in what you want your character to do, and you’ll get an animated clip in no time.

Tip: OpenArt recently added Lip Sync – the ability to move the character’s lips according to the soundtrack. You can upload your own audio (such as commentary created in ElevenLabs, discussed below) and OpenArt will generate a video of your AI character articulating exactly that speech. This links the voice clone to the animated character – the result is a talking avatar very close to reality. This technology is still nascent, but it hints at a future where anyone will be able to “play” in their virtual studio with actors and voices at will.

Example of using AI to work with video and audio 5/5:

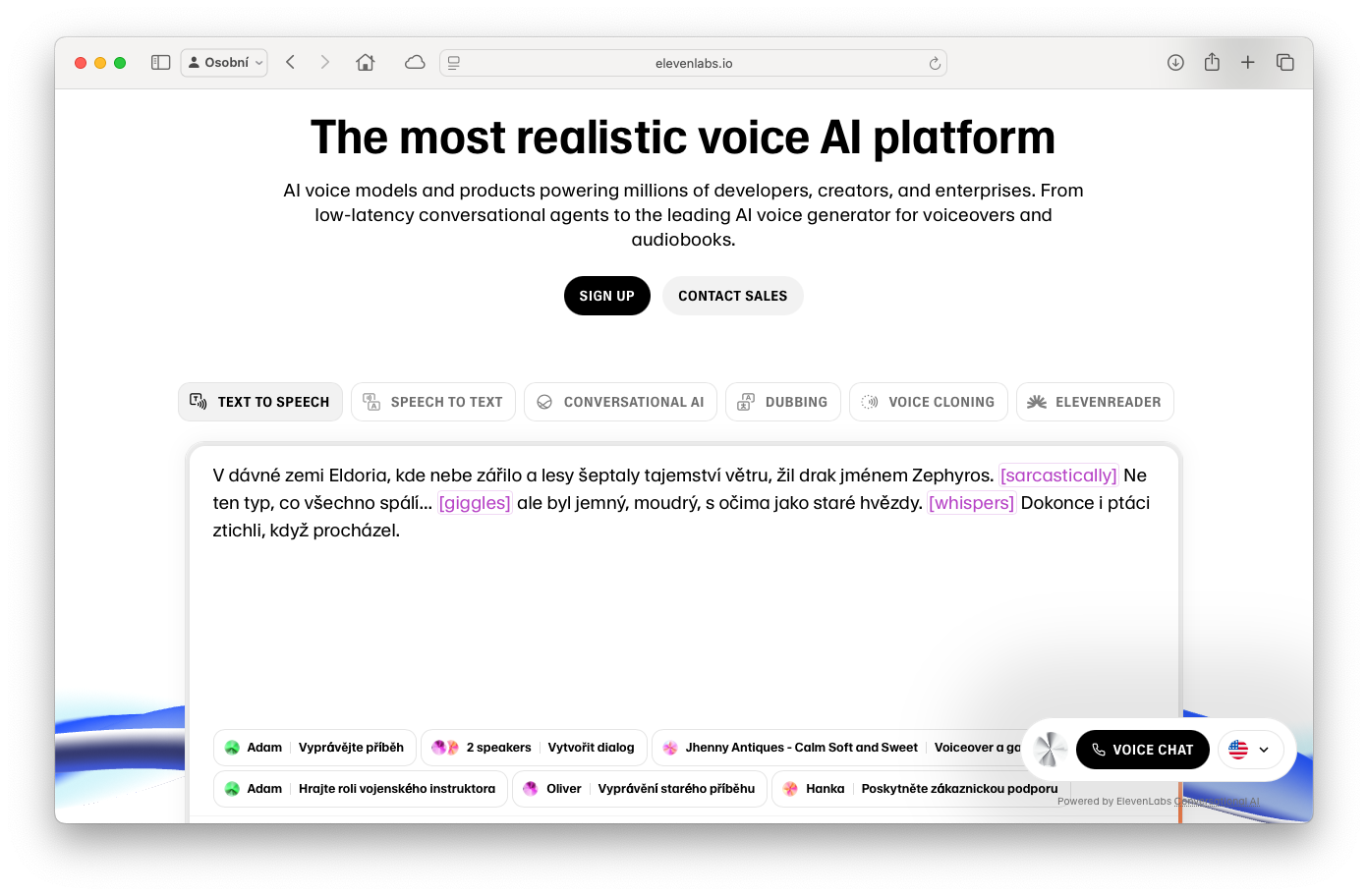

ElevenLabs: text-to-speech and cloning your own voice

Finally, let’s look at the area of sound – specifically synthetic voice. Whether you need to narrate a video, create an audio version of an article, or give your chatbot a voice, you don’t need to hire a professional voice actor. High-end AI models today can read text in a voice that is hard to tell apart from a human one. One of the most well-known tools is ElevenLabs.

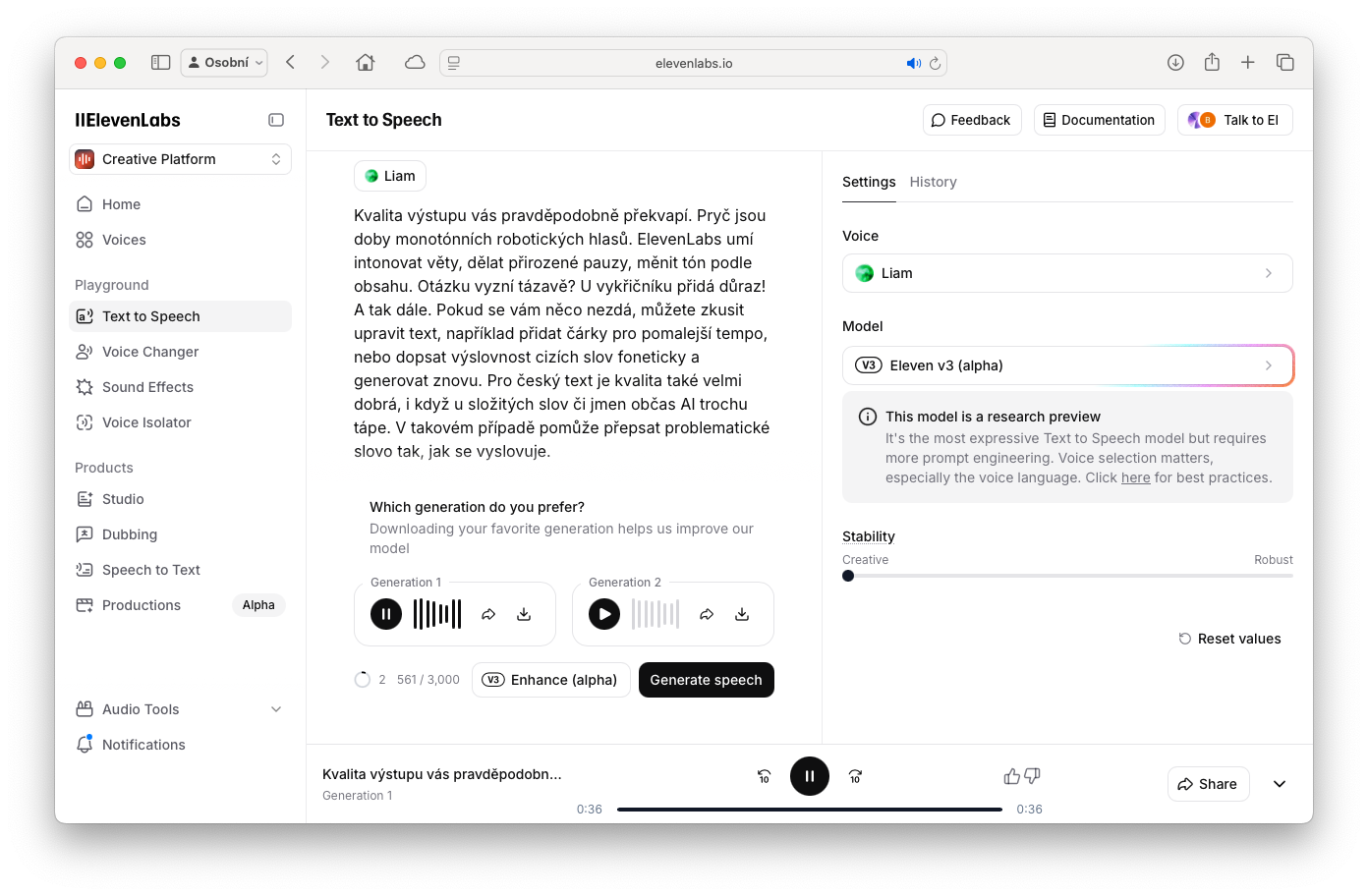

ElevenLabs allows you to simply insert any text and have it converted to spoken word. It supports many languages (including Czech) and offers very natural sounding voices. After registering for ElevenLabs, you can get started right away – open the Speech Synthesis section, type or paste your text and select a voice from the menu. There are preset voices available (most of them are in English; for Czech you can select e.g. Antonín or Zuzana, which sound like a normal Czech presenter). You click on Generate and within a few seconds an audio file is generated that you can play or download.

The quality of the output will probably surprise you – gone are the days of monotonous robotic voices. ElevenLabs can intonate sentences, make natural pauses, change the tone according to the content (make a question sound questioning, add emphasis to an exclamation mark, etc.). If you don’t like something, you can try editing the text (e.g. add commas for a slower pace, or spell foreign words phonetically) and generate it again. For Czech text, the quality is also very good, although for difficult words or names the AI sometimes glitches a bit – in this case it helps to rewrite the problematic word the way it is pronounced.
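If the same tricky words keep coming up, the respelling trick can be automated before you paste the text into the voice generator. The sketch below is an assumption-laden illustration: the function name `respell` and the sample respellings are made up, and it only prepares the text without calling any ElevenLabs service. Tune the respellings by ear for the voice you use.

```python
import re

# Hypothetical respellings; adjust them to whatever your chosen voice reads best.
RESPELLINGS = {
    "HeyGen": "Hey Jen",
    "SmartFP": "Smart F P",
}


def respell(text: str, respellings: dict[str, str]) -> str:
    """Replace whole-word matches with their phonetic respelling."""
    if not respellings:
        return text
    # Longest words first, so a longer entry is never shadowed by a shorter one.
    words = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(w) for w in words) + r")\b")
    return pattern.sub(lambda match: respellings[match.group(1)], text)


script = "We built this SmartFP demo in HeyGen."
print(respell(script, RESPELLINGS))
```

Keeping the respellings in one dictionary means the original script stays readable for humans, and only the copy sent to the voice generator is altered.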

Where does such a synthetic voice come in handy? For example, you can add a voiceover to an instructional video (like the one you created in HeyGen – instead of the default avatar voice, you use your own audio). It’s also great for podcasts or audioblogs – you write an article and with one click you have an audio recording of it that people can listen to instead of reading. Businesses use ElevenLabs for automated phone systems, voice assistants, or even personalized messages (where the customer hears their name in spoken text without anyone manually recording it).

The icing on the cake is the Voice Lab feature, where you can clone your own voice. If you don’t like any of the pre-made ones (or just want it to sound like you), upload a few samples of your voice to the system. All you need is a few minutes of recording – ideally of a clear, noise-free voice. ElevenLabs will use these samples to train a model that speaks almost exactly like you. Then any text you write can sound as if you were reading it yourself. It’s quite a strange experience to hear your voice saying sentences you never said. 🙂

The use is obvious: you can scale yourself – for example, create videos or presentations that sound like you without having to laboriously record each one. Podcast creators localize content into foreign languages this way (they teach their voice to speak English, Spanish, etc., giving them foreign versions of their show that still sound like them).

Tip: ElevenLabs excels in speech expression – it can add emotion to the voice. In the interface, you can set Stability and Clarity/Style parameters for the voice. If you lower Stability, the voice will be richer in expression (e.g. for dramatic reading or emotional speech). Increase Clarity for more formal and cleaner speech (for example, for news). Try playing around with these settings, or use the Voice Styler, where you can add instructions like “say it with enthusiasm” or “in a sad tone” to your text. The AI will then adjust the intonation as if it were actually experiencing the emotion.

What to take away from this piece

- AI can already generate video and voice, which greatly simplifies the creation of multimedia content. Short spots, animations, presentations or spoken commentary can be created without specialized equipment.

- A virtual avatar (HeyGen) can replace your on-camera presentation – ideal when you don’t want to speak in person or need content in multiple languages.

- Generating video from text (Sora, OpenArt) is still at an early stage, but already allows experimentation with visual ideas. Even without knowledge of editing or animation you can get original clips for your projects.

- Consistent Characters: tools like OpenArt can help you maintain a consistent character look across images and video. This gives a new dimension to building visual identity (mascots, characters in campaigns).

- The AI voice (ElevenLabs) is hard to tell apart from a human voice and paves the way for automating audio production – from videos to podcasts to customer support. Cloning your own voice can save a lot of time when recording repetitive text.

- Integration into practice: Integrate all these outputs into your workflows to make them useful. Use project tools (like SmartFP FastTask) to organize work, set clear processes for approving AI-generated content, and be sure to train your team in the ethical use of AI.

- Be accountable: AI puts a lot of power in your hands to create compelling content. Use it creatively but transparently – label the content you generate and respect the privacy and rights of others.

Comparison of AI tools for working with video and audio

| Tool | Functions | Benefits | Price |
|---|---|---|---|
| HeyGen | Creating videos with AI avatars | Easy to use; many avatars and voices; Czech language support; possibility to personalize with your own avatar. | Free: 3 videos per month (720p, up to 3 min). Creator: $29/month (HD, 30 min video, custom avatar). |
| Sora | Generate video from text or image | Advanced video editing features; multiple scene stacking; high quality (1080p) for higher plan. | Part of ChatGPT Plus ($20/month – 720p, 10 s of video) / Pro ($200/month – 1080p, 20 s, faster). |
| SmartFP: Project Management | Project management – campaign planning, tasks, teamwork | Customizable corporate environment; email integration; kanban, timeline, notifications. | License within the SmartFP platform (solution for companies, price according to the scope of deployment – demo option). |
| OpenArt | Generate images and videos with consistent characters | Keeping the same character across outputs; lip-sync feature for talking characters; community shares creations. | Free basic (daily image credits, limited video). Premium credits/plans for multiple generations, faster calculation and advanced features. |
| ElevenLabs | Text-to-speech, cloning the user’s voice | Superior natural voice; support for intonation and emotion; ability to create your own voice. | Free: limited characters/month (approx. 10k characters), no cloning. Starter: from $5/month (more characters, 1 custom voice). |

Recommendations in conclusion

If you have an idea for a specific topic or tool that you would be interested in within AI, let me know! 🙂 This series is being created for you – I’m happy to tailor it to what you’re most passionate about or tempted to try. At the same time, I’d love for you to share your experiences with the tools we’ve covered today. Have you tried creating your own video avatar, or have you had your voice cloned? What have you been excited about and what have you found disappointing so far? Your insights may help other readers and me as we continue to explore the possibilities of AI.

Let’s continue to explore together how AI can make our work and life easier, while learning from it and having some of that futuristic fun. Thanks for watching the series, and I’ll look forward to the next installment!

What awaits us in the next episodes

In the next – already fifth – episode, we will focus on how AI helps us work with information and data. We’ll introduce tools like NotebookLM, the advanced capabilities of ChatGPT, and the Perplexity search engine, which are used to efficiently research, analyze text, and work with large documents. We will show how to use AI as an assistant for studying, processing corporate documents or even researching for business decisions. You have a lot to look forward to – AI can also be a powerful “partner” for working with data, not just for content creation.
